19 Adaptive Randomisation in Population D-E-R Trials: Why We Should Learn as We Go

At the end of this chapter, the reader will understand:

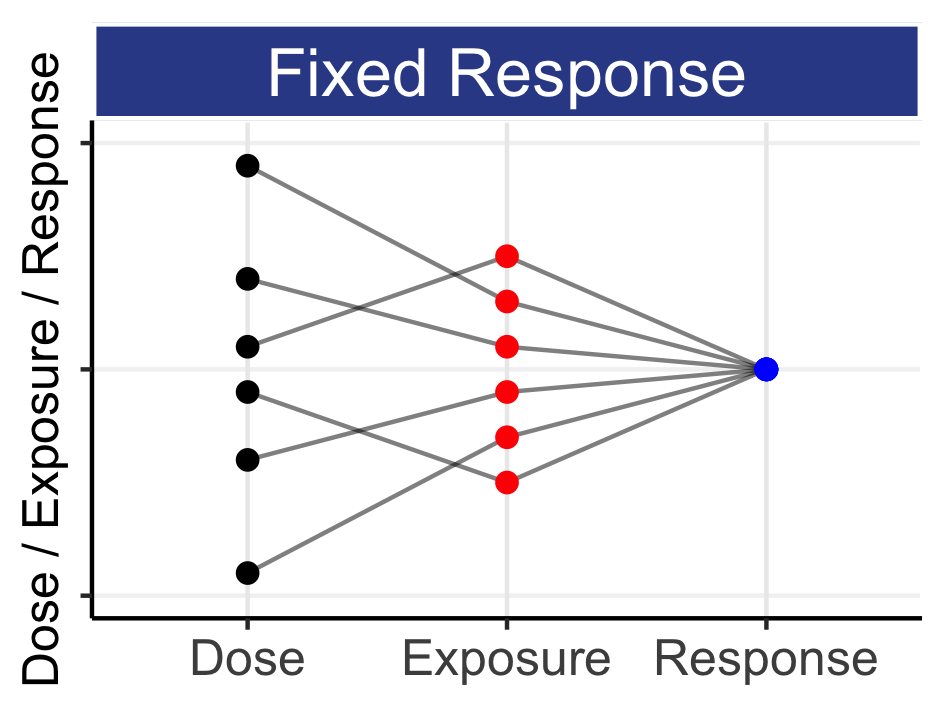

- To quantify the Population D-E-R relationships accurately, precisely and as quickly and efficiently as possible, the doses studied should be placed at the optimal dose levels (the doses that are most informative).

- These optimal dose levels depend on the true Population D-E-R relationships that we seek to quantify; thus, to be most efficient (and ethical), we must “learn as we go”.

- Adaptive randomisation is the most efficient way to “learn as we go”. We start with a randomisation schedule that supports a very wide dose range, and then “zero in” on the optimal (most informative) doses.

- To implement adaptive randomisation, we need a team, similar to a Data Monitoring Committee, to review the accruing data and update the randomisation allocations.

- Adaptive randomisation is most valuable when our initial assumptions about the location and shape of the D-E-R relationships are imperfect (as they always are!).

Unsurprisingly, in drug development we need to investigate the right dose range for each drug. In addition, selecting the optimal dose levels within this dose range requires an understanding of the location and shape of the D-E-R relationships. We appear to be in a chicken-and-egg situation: we can only design a D-E-R trial optimally with the information that we are seeking to obtain from the trial! The pharmaceutical industry is not the first industry to face such a challenge. For example, when Amazon introduces 100 new products, they have a very limited understanding of how popular each will be, and hence of how many units of each product they should order/stock in their warehouses, and ultimately of how much profit each product will generate over the first year. What Amazon does not do is wait until the end of the year to assess the sales of each product. Such a foolish strategy would see them holding far too much stock of the least popular products and failing to order additional stock of the most popular ones; they would not be happy to see the most popular new product sell out by March but delay reordering until December. The solution to this problem is to look at the accruing data on a continuous basis and act accordingly. Thus Amazon may start with 100 new products, but will use daily/weekly sales to increase their orders for the most popular/profitable products and reduce their orders for the least popular/profitable ones. The weakest products would be phased out and replaced with more successful ones. This may all seem quite obvious, and it is. Why would Amazon be so stupid as to wait until the end of the year to review their own sales data and act accordingly? Such a lazy strategy would surely be less successful than one that adapts their product lines and orders.

Now if we substitute the dose range for the product range, the best (most informative) dose levels for the most popular/profitable products, and patients for warehouse stock, I hope the analogy with Population D-E-R trials becomes evident. We start with a wide range of doses (products). As we obtain accruing data across this wide range, we can “zero in” on the best doses (most popular products), with new patients (stock) being randomised to (ordered for) the best doses (products).

The above process of “learning as we go” describes one type of adaptive randomisation. It is the most efficient (and hence most ethical) way to learn about Population D-E-R relationships.

Thus in a Population D-E-R trial we may start with 10 dose levels (e.g. placebo, 0.5 mg, 1 mg, 2.5 mg, 5 mg, 10 mg, 20 mg, 50 mg, 100 mg and 200 mg), but ultimately end with a narrower range focused on the actual location of the D-E-R relationships. This may be towards the lower five active doses (e.g. placebo, 0.5 mg, 1 mg, 2.5 mg, 5 mg and 10 mg) or towards the higher five (e.g. placebo, 10 mg, 20 mg, 50 mg, 100 mg and 200 mg). In these two simple cases, the final dose ranges investigated differ 20-fold (i.e. 0.5-10 mg versus 10-200 mg). Adaptive randomisation therefore gives us a wide “margin of error”: we maximise our chances of investigating the right dose range/levels.
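The “zero in” process can be sketched as a small simulation. This is a minimal sketch under stated assumptions, not a description of any published design: the response follows a Hill = 1 Emax model (E0 = 0, Emax = 1 and a residual SD of 0.1, all hypothetical values), each cohort is allocated equally to placebo, the top dose and one interior dose from the candidate doses in the example above, and after each cohort an interim fit re-estimates ED50 by profile least squares. The interior dose is chosen so that the equally weighted three-dose design is D-optimal on the dose grid at the current ED50 estimate. All function names (`run_adaptive_trial`, `next_doses`, `fit_ed50`, `emax_response`) are hypothetical.

```python
import random

# Candidate doses (mg) from the example in the text; 0 denotes placebo.
DOSES = [0, 0.5, 1, 2.5, 5, 10, 20, 50, 100, 200]


def emax_response(dose, e0, emax, ed50):
    """Mean response under an Emax model with Hill = 1 (an assumption)."""
    return e0 + emax * dose / (ed50 + dose)


def fit_ed50(data, grid):
    """Profile least squares: for a fixed ED50 the model is linear in
    (E0, Emax), so fit those by ordinary least squares and keep the
    candidate ED50 with the smallest residual sum of squares."""
    best_rss, best_ed50 = float("inf"), None
    ys = [y for _, y in data]
    my = sum(ys) / len(ys)
    for ed50 in grid:
        xs = [d / (ed50 + d) for d, _ in data]
        mx = sum(xs) / len(xs)
        sxx = sum((x - mx) ** 2 for x in xs)  # > 0: placebo and top dose always present
        slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
        intercept = my - slope * mx
        rss = sum((y - intercept - slope * x) ** 2 for x, y in zip(xs, ys))
        if rss < best_rss:
            best_rss, best_ed50 = rss, ed50
    return best_ed50


def next_doses(ed50_hat, doses=DOSES):
    """Best equally weighted 3-dose design on the grid for the Hill = 1 Emax
    model at the current ED50 estimate: placebo, the top dose, and the dose
    whose d / (ED50 + d) is closest to half of its value at the top dose."""
    top = max(doses)
    half = top / (ed50_hat + top) / 2
    middle = min((d for d in doses if 0 < d < top),
                 key=lambda d: abs(d / (ed50_hat + d) - half))
    return [0, middle, top]


def run_adaptive_trial(true_ed50, guess_ed50, cohorts=10, per_dose=3,
                       sigma=0.1, seed=1):
    """Simulate cohorts; after each cohort an (unblinded) interim analysis
    re-estimates ED50 and updates the doses used for the next cohort."""
    rng = random.Random(seed)
    grid = [0.1 * 1.05 ** k for k in range(200)]  # ED50 search grid, ~0.1 to ~1650 mg
    data, middles = [], []
    ed50_hat = guess_ed50
    for _ in range(cohorts):
        arms = next_doses(ed50_hat)
        middles.append(arms[1])
        for d in arms:
            for _ in range(per_dose):
                mean = emax_response(d, e0=0.0, emax=1.0, ed50=true_ed50)
                data.append((d, mean + rng.gauss(0.0, sigma)))
        ed50_hat = fit_ed50(data, grid)
    return middles, ed50_hat


middles, ed50_hat = run_adaptive_trial(true_ed50=5, guess_ed50=10)
print("interior dose used by each cohort (mg):", middles)
print("final ED50 estimate (mg):", round(ed50_hat, 2))
```

With an initial guess of ED50 = 10 mg, the first cohort uses 10 mg as its interior dose; later cohorts move to whichever dose the interim estimates favour. A real trial would randomise patients within each cohort and would usually keep some allocation across the wider dose range, which this sketch omits for brevity.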

Unfortunately, this is not what happens in >95% of D-E-R trials run by the pharmaceutical industry. Instead, we wait until the end of the trial (the equivalent of Amazon waiting until the end of the year) and then look at the data to see whether our initial guesses were reasonable. This is painfully wasteful of time, money and patient resources. To be direct, it is both inefficient and unethical in equal measure. Indeed, I am sceptical that many D-E-R trials actually define prospectively their “initial guess” of the true location and shape of the D-E-R relationships for efficacy and safety, and then evaluate whether their trial design (dose levels and N) is actually capable of quantifying those relationships accurately and precisely. Rather, most D-E-R designs I see appear to select a few convenient doses (often very closely spaced) and a small N, and then “see what they get”. The interpretation of the final observed data is predictably unpredictable. With a poor design, it can easily be shown using simulation/re-estimation methods that the observed outcomes in the trial could support a wide range of “best” doses based on a simple/naïve analysis (e.g. just picking the dose that happens to have the “best” observed outcome). Such trials waste the sponsor's time and money by yielding so little useful data, and we must also question their ethics.
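The re-estimation argument can be illustrated with a small simulation. This is a minimal sketch using hypothetical values, not data from any real trial: three closely spaced active doses (5, 7.5 and 10 mg), 10 patients per arm, a Hill = 1 Emax model with ED50 = 10 mg and Emax = 1, and a residual SD of 1. Each simulated trial applies the naïve analysis of picking the dose with the highest observed mean. The function name `naive_best_dose_frequencies` is illustrative only.

```python
import random
from collections import Counter


def naive_best_dose_frequencies(doses, n_per_arm, true_ed50, emax=1.0,
                                sigma=1.0, n_trials=2000, seed=2024):
    """Simulate many identical trials and count how often each dose has the
    highest observed mean response (the naive 'best dose' analysis)."""
    rng = random.Random(seed)
    picks = Counter()
    for _ in range(n_trials):
        observed = {}
        for d in doses:
            true_mean = emax * d / (true_ed50 + d)  # Hill = 1 Emax model
            sample = [rng.gauss(true_mean, sigma) for _ in range(n_per_arm)]
            observed[d] = sum(sample) / n_per_arm
        picks[max(observed, key=observed.get)] += 1
    return picks


# A "convenient" design: three closely spaced active doses, 10 patients each.
picks = naive_best_dose_frequencies([5, 7.5, 10], n_per_arm=10, true_ed50=10)
for dose in sorted(picks):
    print(f"{dose} mg looked 'best' in {picks[dose]} of 2000 simulated trials")
```

In this model the true mean response rises monotonically with dose, yet with so little separation between doses and so few patients the lower doses are still declared “best” in a substantial fraction of the simulated trials.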

When a patient enters such a trial, I think there is an onus on the sponsor to ensure that the data generated from that patient will contribute meaningfully to the subsequent analysis. For example, is it ethically acceptable to randomise a patient to 0.5 mg if the data already collected on other patients across a wide dose range would show that 0.5 mg is essentially uninformative for our D-E-R understanding? It is my view that we should feel compelled to make the data from each and every patient as informative as it can be; this means ensuring patients receive the most informative doses. Mathematically, we can quantify how informative each potential dose level is, so, for example, 5 patients at 0.5 mg could provide the same information as 1 patient at 10 mg (data in the “wrong” part of the D-E-R curve is much less useful than data in the “right” part). Thus randomising the patient to 0.5 mg is both wasteful and, in my mind, unethical.
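To make “quantifying how informative each dose is” concrete, here is a minimal sketch under simplifying assumptions that are not from the chapter: a Hill = 1 Emax model with true ED50 = 10 mg, E0 and Emax treated as known, normal residuals with SD sigma, and interest in ED50 only. The Fisher information about ED50 from one patient at dose d is then the squared derivative of the mean response with respect to ED50, divided by the residual variance. The function name `ed50_information` is hypothetical.

```python
def ed50_information(dose, ed50, emax=1.0, sigma=1.0):
    """Fisher information about ED50 from one patient at `dose`, assuming a
    Hill = 1 Emax model, E0 and Emax known, and normal residuals with SD sigma."""
    slope_wrt_ed50 = -emax * dose / (ed50 + dose) ** 2  # d(mean response)/d(ED50)
    return slope_wrt_ed50 ** 2 / sigma ** 2


doses = [0.5, 1, 2.5, 5, 10, 20, 50, 100, 200]
reference = ed50_information(10, ed50=10)
for d in doses:
    ratio = reference / ed50_information(d, ed50=10)
    print(f"{d:>5} mg: {ratio:6.1f} patients needed to match one patient at 10 mg")
```

Under these assumptions the information about ED50 peaks at the dose equal to ED50, and one patient at 10 mg carries about as much information as roughly 30 patients at 0.5 mg; the exact exchange rate depends heavily on the model and on which parameters are of interest. The information is also symmetric on the log-dose scale around ED50 here (200 mg carries the same information about ED50 as 0.5 mg), although the top of the dose range remains essential for estimating Emax, which this simplification treats as known.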

Adaptive randomisation can be motivated on purely economic grounds, as we learn the location and shape of the true D-E-R relationships as quickly and efficiently as possible (saving both time and money), but also on ethical grounds, as each patient contributes as much as possible to the goal of the trial.

Two key points of adaptive randomisation need to be clarified:

1. What is meant by the best/most informative doses?
2. Who is unblinded to what data, and how are they changing the randomisation scheme?

When we discuss the best or most informative dose, we need to be precise about what this actually means for D-E-R trials. When our goal is to determine the D-E-R relationships accurately and precisely, we need data at key doses on the D-E-R curve. For example, since the D-E-R for efficacy quantifies the magnitude of the changes versus placebo, we will indeed need some data at the bottom of the D-E-R curves (i.e. at low doses and placebo). Thus by “best” we mean most informative; we do not mean anything to do with what is “best” for a particular patient. Remember, these trials, like most clinical trials, are not explicitly trying to give the patient the “best” dose. This is not to say that the dose the patient is assigned to (or, in dose titration trials, the dose they are titrated to) will not be “best” for them, but this is not our primary goal during this stage of clinical drug development; we are still in the learning phase. Medical ethicists have written much on the important interplay between the social and clinical value of clinical trials and the benefits and harms to individual trial participants. I plan to add a bonus chapter on this key topic to share my opinions with you; in short, however, I subscribe to the viewpoint that we must seek to minimise any harms to individual patients, but that otherwise their participation in D-E-R trials serves to reduce the uncertainty around the D-E-R relationships as effectively as possible. Thus I view each trial participant (patient) as a kind person who may derive no, some or major benefit from the dosing regimen given to them, but who will unquestionably contribute to our better understanding of how best to use the drug going forward.

If we wish to use the accruing data to guide the adaptive randomisation, we will require unblinded experts both to analyse and interpret the accruing data and to update the randomisation allocations. This team of experts will act in a role similar to that of an (independent) Data Monitoring Committee (DMC), sometimes also referred to as a Data Safety Monitoring Board (DSMB). Regulatory advice on DMCs has been developed (see link below).

To be clear, it is in the interest of all parties that we “learn as we go”; the drug company, the regulator and the patients all benefit from this intelligent approach to running the trial. The drug company are using their R&D dollars most efficiently to quantify, quickly, accurately and precisely, the D-E-R relationships that both they and the regulators want to know. Patients within the trial contribute fully to this effort, and they and future patients may benefit from the best dosing regimens being approved based on a clear quantification of the D-E-R relationships for both the benefits and the harms of the drug.

The primary tasks of the team would be to:

- Adapt the randomisation schedule based on the accruing data
- Stop the trial for futility and/or efficacy

The ASTIN trial [1] is an excellent example of this type of adaptive D-R trial. In ASTIN, Pfizer sought to determine the D-R of UK-279276 (a neutrophil inhibitory factor) on the Scandinavian Stroke Scale in patients with an acute ischemic stroke. They considered placebo and 15 dose levels from 10 to 120 mg. Although the D-R model was not something I would endorse, the adaptive design, conduct and ongoing analyses they employed were all excellent. Unfortunately, the trial ended early for futility: as the accruing data suggested poor efficacy across the dose range, the higher doses were targeted more heavily, but ultimately no dose was sufficiently different from placebo to merit further investigation. From an operational perspective, this trial was remarkably successful; Pfizer learnt quickly and efficiently that the D-R for this drug was very poor across the whole dose range. I understand that senior management in Pfizer conflated the performance of the drug (poor) with the performance of the trial (excellent), ultimately deeming it a “failure”. Clearly, paying for this trial but not getting an approval at the end would have been disappointing, but “failing fast” is a mantra of any business that develops high-risk/high-reward products; this trial did its job brilliantly and should be applauded. Had the drug worked, I think we would have seen an enormous uptake of these designs, with Pfizer leading the way (a real lost opportunity, in my opinion).

I co-wrote a paper showing how adaptive randomisation can be used in combination with optimal design for D-R modelling [2]. The work showed that when our initial assumptions about the location (ED50 = 10 mg) and shape (Hill = 1) of the D-R were exactly correct, the adaptive design would, as expected, stick to the initial (optimal) dose levels that most precisely estimate the D-R relationship. However, in scenarios where the true location of the D-R differed from our initial assumption (e.g. ED50 = 5 mg or 20 mg), or the shape differed (Hill = 0.5 or 2), adaptive randomisation learnt from the accruing data and adjusted the dose levels accordingly, ultimately finding the best/most informative dose levels under the true D-R relationship.
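Why the optimal dose levels move with the true ED50 can be shown in a much simplified form by asking which three doses from the candidate grid used earlier in this chapter give the most precise estimates under different assumed ED50 values. This sketch is not the method of [2]: it assumes a Hill = 1 Emax model with parameters (E0, Emax, ED50), equal allocation to three doses, and the D-optimality criterion (maximising the determinant of the Fisher information matrix). Hill is fixed at 1 for simplicity, and the function names are hypothetical.

```python
from itertools import combinations

# Candidate doses (mg) from the example earlier in the chapter; 0 = placebo.
DOSES = [0, 0.5, 1, 2.5, 5, 10, 20, 50, 100, 200]


def det3(m):
    """Determinant of a 3 x 3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def gradient(dose, ed50, emax=1.0):
    """Partial derivatives of E0 + Emax*d/(ED50 + d) w.r.t. (E0, Emax, ED50)."""
    return (1.0, dose / (ed50 + dose), -emax * dose / (ed50 + dose) ** 2)


def best_three_dose_design(ed50, doses=DOSES):
    """Equal-allocation 3-dose design maximising det(information matrix).
    With 3 doses and 3 parameters, det(M) = det(J)**2 / 27, where J stacks
    the gradient rows, so it is enough to maximise |det(J)|."""
    return max(combinations(doses, 3),
               key=lambda trio: abs(det3([gradient(d, ed50) for d in trio])))


for ed50 in (5, 10, 20):
    print(f"assumed ED50 = {ed50} mg -> doses {best_three_dose_design(ed50)}")
```

Under these assumptions the search always returns placebo and 200 mg, plus an interior dose that tracks the assumed ED50 (5, 10 and 20 mg for ED50 = 5, 10 and 20 mg respectively). A design fixed in advance around the wrong ED50 therefore places its interior dose in a less informative part of the curve, which is the motivation for letting the design adapt as the data accrue.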

The bottom line is that employing adaptive randomisation to “learn as we go” is always the most efficient strategy, and it is extremely valuable when our initial expectations about the location and shape of the D-R are incorrect. Given that it is normal not to have an excellent understanding of the true location and shape of the D-R before running a D-R trial, I am astonished that such designs are not used routinely.