4 A Brief History Of Drug Development; The Good, The Bad And The Ugly

At the end of this chapter, the reader will understand:

Why randomised controlled trials (RCTs) are considered the gold standard for assessing new drug regimens through their use of randomisation, blinding, and control arms.

How clinical drug development is based on a series of RCTs nominally categorised as phase 1, phase 2 and phase 3 trials.

Why RCTs are not agricultural experiments, and patients are not fields! If we care about individual patient outcomes, we need to move beyond average outcomes based on the same fixed-dose regimen for all patients.

Why drug development is an estimation problem, rather than a null hypothesis significance testing (NHST) problem.

Why off-label drug use and some placebo controlled trials are unacceptable.

It is valuable to briefly reflect on the history of clinical trial design and drug development, and how this has evolved into the general drug development paradigm we see today; by looking back, we can see where we went right, and where we went wrong. Initially we will focus on the good.

Randomised controlled trials (RCTs) are generally regarded as the gold standard by which we measure the benefit-risk of new experimental drug regimens. The first modern RCT is generally reported to be the 1948 Medical Research Council trial that assessed the efficacy of an antibiotic, streptomycin, for the treatment of pulmonary tuberculosis [1]. The goal of this trial was to evaluate whether 500 mg of streptomycin given every 6 hours over 4-6 months improved survival versus standard of care.

Five key components that made this a “modern” trial were the application of:

Inclusion/exclusion criteria

Randomisation

Blinding

A control arm

A formal statistical analysis

Their report listed how patients were required to satisfy a number of features/criteria that we would now refer to as inclusion/exclusion criteria. The authors added:

“Such closely defined features were considered indispensable for it was realized that no two patients have an identical form of the disease, and it was desired to eliminate as many of the obvious variations as possible.”

Although there is now a strong desire to ensure patients in modern clinical trials are truly representative of patients who may subsequently be given the drug, the aim of a clear and focussed experiment in a relatively homogeneous group of patients was reasonable in 1948.

The trial statistician, Austin Bradford Hill, utilised a novel type of randomisation that we would now refer to as a stratified block randomisation. This was in place of the older, and inferior, alternating randomisation that was more commonly used previously [2].

Although they were primarily motivated to ensure any selection biases were minimised, the use of randomisation is now universally recognised as a pillar of sound clinical trial design.
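To make the idea of stratified block randomisation concrete, here is a minimal Python sketch. It is not a reconstruction of the procedure used in 1948; the function name, the stratum labels, the block size of 4 and the arm labels "S" (streptomycin) and "C" (control) are all assumptions chosen purely for illustration. Within each stratum, patients are allocated in shuffled blocks that each contain equal numbers of each arm, so the arms stay balanced within every stratum as recruitment proceeds.

```python
import random


def stratified_block_allocation(strata_sizes, block_size=4, arms=("S", "C"), seed=2024):
    """Return an allocation list per stratum using permuted blocks.

    strata_sizes: dict mapping a (hypothetical) stratum label to the number
                  of patients to allocate in that stratum.
    block_size:   patients per block; must be a multiple of the number of arms.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")

    rng = random.Random(seed)  # fixed seed so the schedule is reproducible
    per_arm = block_size // len(arms)
    schedule = {}
    for stratum, n_patients in strata_sizes.items():
        allocations = []
        while len(allocations) < n_patients:
            block = list(arms) * per_arm  # equal numbers of each arm in the block
            rng.shuffle(block)            # random order within the block
            allocations.extend(block)
        schedule[stratum] = allocations[:n_patients]  # last block may be incomplete
    return schedule


# Hypothetical strata (e.g. centre by sex); the sizes are illustrative only.
example = stratified_block_allocation({"centre_A/female": 8, "centre_A/male": 6})
for stratum, allocations in example.items():
    print(stratum, allocations)
```

Unlike simple alternation, the next allocation cannot be predicted from the previous one alone, although very small blocks can still be partly predictable near the end of a block.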

Blinding is typically concerned with ensuring treatment allocations are concealed either for just the patient (single blind) or for both the physician and the patient (double blind), with the aim of minimising any conscious or unconscious biases in the reporting of responses. A control arm is a group of patients who do not receive the experimental drug regimen. These patients may receive a placebo or standard of care, and serve the essential purpose of providing a reference group against which to benchmark the results from the experimental drug regimen. The streptomycin trial utilised a control group, and these patients received the normal standard of care. A rudimentary (by modern standards) blinding effort was used insofar as the control group were unaware they were the control group for the streptomycin group of patients! The use of double blind trials is a feature of many modern clinical trials, utilising further methods such as “double dummy” regimens to mask any differences between the treatment arms. Control arms are, rightly, ubiquitous in modern RCTs, and few would disagree that standard of care type reference arms provide a solid basis for determining the comparative effectiveness of novel experimental drug regimens.

Finally, the trial results showed that 7% (4/55) of the streptomycin treated patients and 27% (14/52) of the control patients died within 6 months, a difference they reported as:

“…statistically significant; the probability of it occurring by chance is less than one in a hundred.”

Here we see the adoption of the Fisher/Pearson null hypothesis significance testing (NHST) approach to the interpretation of the results. Although I will subsequently argue that we should view all clinical trial results from an estimation perspective rather than an NHST perspective, the use of the NHST approach by Hill sought to inject statistical rigour into the interpretation of the observed treatment differences, and hence must be applauded.
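The contrast between the two perspectives can be illustrated using the mortality counts quoted above (4/55 versus 14/52). The Python sketch below is only an illustration: the function name, the Wald-type 95% interval and the pooled two-sided z-test are my assumptions, and are not necessarily the methods used in the original report. The P value addresses "is the difference zero?", whereas the estimate and its interval address "how large is the difference, and how precisely do we know it?".

```python
import math


def risk_difference(events_a, n_a, events_b, n_b, z_crit=1.96):
    """Risk difference (group b minus group a), Wald interval and z-test P value."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    diff = p_b - p_a

    # Estimation: unpooled standard error and an approximate 95% interval
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    lower, upper = diff - z_crit * se, diff + z_crit * se

    # Significance testing: pooled standard error under "no difference"
    p_pool = (events_a + events_b) / (n_a + n_b)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pool
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area

    return diff, lower, upper, p_value


# Deaths within 6 months: streptomycin 4/55, control 14/52
diff, lower, upper, p_value = risk_difference(4, 55, 14, 52)
print(f"Mortality difference (control - streptomycin): {diff:.1%}")
print(f"Approximate 95% interval: {lower:.1%} to {upper:.1%}")
print(f"Two-sided P value: {p_value:.4f}")
```

With only 107 patients the interval is wide, which is exactly the information that a bare statement of "statistically significant" conceals.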

This rather long discussion of the 1948 streptomycin trial is included both because it serves to highlight many of the successful aspects of modern clinical trials, and because this type of trial design has inadvertently led to some of the failings I see in modern drug development. Before embarking on the details of these failings, we should also briefly introduce how RCTs fit into the broader clinical drug development programs we see today. The development of a new drug involves conducting a series of RCTs, and these are broadly grouped into the three phases of drug development:

Phase 1 trials are precisely controlled trials where small cohorts of individuals receive a single dose or multiple doses of the drug. These include the so-called first in man trials (FIM), single and multiple ascending dose trials (SAD and MAD), along with additional clinical pharmacology trials designed to answer essential drug development questions (e.g. renal/hepatic impairment, drug-drug interactions, bioequivalence, QTc trials etc.). Phase 1 trials are normally conducted in healthy individuals, with oncology being a notable exception. Treatment durations are typically days to weeks.

Phase 2 trials are larger trials in patients. These are typically called “dose ranging” or “dose finding” trials, as they investigate a range of dosing regimens; they may also include a placebo and/or an active control arm. Treatment durations are typically weeks to months. The goal/design is typically stated as being to quantify the dose-response (D-R) relationships (more on this later!).

Phase 3 trials are the largest trials, again in patients. In these trials it is common that only 1-2 doses are compared to placebo / standard of care. Treatment durations are typically months to years. These are often called pivotal trials, since their results will be used to support the approval for the dosing regimens investigated. The goal/design is often based on achieving a statistically significant difference in favour of the new drug over placebo/standard of care for a primary efficacy endpoint.

For the interested reader, this book chapter provides additional background on the history of the drug development process and the role of statistics/statisticians (Statistics and the Drug Development Process). Importantly, it charts how we have continually improved drug development for the better.

We now have sufficient background to discuss the 1948 streptomycin RCT in more detail, before discussing the bad in the design and analysis of many modern RCTs and drug development programs.

Back in 1948 I am sure there was abundant quackery in the reported benefits of experimental drugs, along with a plethora of anecdotal “evidence” based on limited data from either individual patients or small groups of patients. The streptomycin trial therefore represented an important shift in how new experimental drug regimens were assessed, one centred on good experimental design principles and robust statistical analysis. The design and language used to describe the trial have the “fingerprints” of the foundational work from the Rothamsted Experimental Station on the design and analysis of agricultural field trials from the 1920s onwards, of which Ronald Aylmer Fisher was a leading figure. If you are not familiar with the history of modern statistical analysis, much can be traced back to these early pioneers and innovators. At the time, their “experimental unit” was typically a field or animal, and “yield” was often the key response of interest. Randomisation, Latin square designs, balanced incomplete block designs, factorial designs and hierarchical/nested designs were central components of experimental design, with corresponding analyses based on describing sources of variation (e.g. ANOVA), P values and statistical significance. Their goal was often to determine what combinations of agricultural varieties/practices and quantities of agrochemicals (fertilisers, insecticides etc.) generated, on average, the best yield of a crop. Minimising “variation” between experimental units was seen as an invaluable aid in distinguishing real treatment effects from random field-to-field or animal-to-animal variation.

Farmers, at least in the 1920s, were not interested in the specific results of any individual field, but rather in obtaining the best average yield across all fields. It would be unlikely that any farmer would have had the time or energy to consider “personalised” treatments for each field; rather all fields should receive the same basic treatment. For example, fields generate some yield without agrochemicals, but with agrochemicals this could be improved; they were turning a good outcome into a great outcome. In addition, treatments needed to be simple to ensure they could easily be rolled out to the farmers.

In agriculture, the focus on average outcomes across groups of fields with the same treatment that is simple to use makes sense.

In drug development, the focus on average outcomes across groups of patients with the same treatment that is simple to use does not make sense. Why? Because we must care about individual patient outcomes. Some flexibility with dosing is a small price to pay for better patient outcomes.

We can therefore list 6 key considerations when we plan the design and analysis of modern, patient-centric, RCTs:

We need to look beyond average outcomes with the same, simple drug regimen.

We must care about (and hence optimise) individual patient outcomes; patients are not fields!

Patients will normally need different doses.

Drug development needs different/better designs and analyses than basic agricultural designs and analyses. Simple does not always suffice.

Patient heterogeneity is not a “nuisance” variation; it is real and important.

Estimation, and not significance testing, is the goal of the analysis.

Regarding patient heterogeneity, the 1948 streptomycin report specifically referred to the desire to “eliminate…variations” when describing the inclusion/exclusion of patients. In modern drug development, we should see all patient groups who may ultimately receive the approved drug as candidates for recruitment in the supporting drug trials. Thus inclusion/exclusion criteria should not be used to “reduce variation” in the patient population; this is a clear distinction between agricultural trials, where homogeneous fields may be appropriate, and drug development for patients, where we seek the best dosing regimens for each one of our heterogeneous patients (we want solutions for all patients). The idea that drug regimens should be approved for a wide range of patients based on trial results from a select, narrow subgroup of the wider patient population is both incoherent and unacceptable. For example, if an 80-year-old patient on multiple medications would be considered a candidate for the drug post-approval, then should we not have obtained data from similar patients within our well-controlled clinical trials pre-approval, where a multitude of efficacy and safety parameters would have been collected and analysed? Ignorance post-approval by sidestepping such patients pre-approval is wrong. This is not to say that inclusion/exclusion criteria cannot be more restrictive earlier in the development of a drug, when both efficacy and safety data are limited. However, at some stage pre-approval we must collect data in a patient population that is truly representative of those who will ultimately receive the drug post-approval.

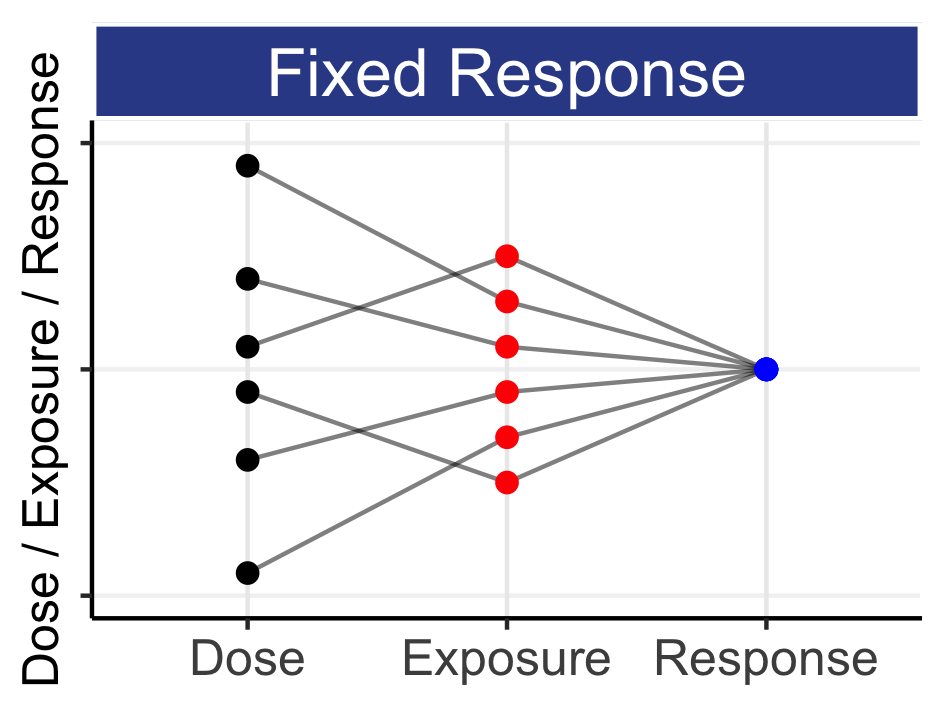

Throughout this book, the emphasis is always one of estimation, not of significance testing. The significance testing approach to design/analysis is concerned with being able to reject statements such as “the drug effect is zero,” whereas the estimation approach is concerned with quantifying how measures of efficacy and safety/tolerability change as a function of the dosing regimen. Estimation is concerned with accurately and precisely answering the “how much” question. With significance testing, the idea of collapsing a distribution of an estimate of interest (like a treatment difference) to a binary yes/no based on whether the P value is less than 0.05 is unhelpful. Thus the notion that two placebo controlled phase 3 trials with P<0.05 should be acceptable for regulatory approval is laughable. The drug company has only successfully demonstrated (twice) that the drug effect is not zero for a single primary efficacy endpoint! This is no “gold standard”. For any meaningful assessments of the benefits and harms, we need to precisely quantify how multiple efficacy and safety/tolerability endpoints change as a function of the dosing regimen (i.e. across a range of doses if we wish to determine a suitable dose range for approval). In cases where only a single dose level is investigated in phase 3, there is clearly no opportunity for either the sponsor or the regulator to determine whether the dosing regimen is, in any way, optimal. Such weak data also inhibit any understanding of how steep or flat the actual dose-exposure-response (D-E-R) relationships are, and hence we are unable to quantify the risks to patients who have particularly high drug exposures, or inadvertently take a higher dose than planned. Frankly, this is just poor drug development.

We have covered a number of good features of modern RCTs such as randomisation, blinding and control groups. We have also mentioned some of the bad features, such as focusing on average outcomes rather than individual outcomes, the fixation on simple dosing regimens, and the overuse of statistical testing and P values (rather than focussing on the precise estimation of the effects for different dosing regimens). In the final section I will briefly cover some of the ugly features. The list could be longer, but I wanted to draw attention to 3 particular failures that I find unacceptable. As an ethical scientist, these do not sit well for me, and I hope you would agree.

Firstly, there is the use of “off-label” dose regimens, both for adults and children. The routine use of off-label doses is the proverbial “elephant in the (regulator’s) room,” and contravenes the fundamental principles of evidence-based medicine. For example, at the European Medicines Agency Dose Finding Workshop meeting in December 2014, one physician stated how he used most antipsychotics at doses substantially different from the approved doses. I expected the room to explode into discussion. Instead, there was no discussion, and the report simply noted for this presentation:

“This means that the benefit/risk balance of the ‘real’ doses has never been subject to regulatory scrutiny.”

Is it acceptable that we routinely expose individual patients to doses beyond those studied and documented? Is this “wild west” approach to dosing, in which “anything goes,” acceptable? In short, drug companies and regulators cannot sit in ivory towers with pieces of paper saying how the drug should be used, whilst allowing patients to be given untested dosing regimens by well meaning but misguided physicians. If novel dosing regimens are to be tested, surely these must be investigated in appropriate RCTs that have obtained ethics approval and informed consent.

Secondly, there is the overuse of placebo controlled trials in therapeutic areas where numerous, effective treatments exist. For example in type 2 diabetes, patients enter clinical trials with significant hyperglycemia. Despite over 40 drugs being approved for type 2 diabetes, we continue to randomise patients to placebo. Given sitagliptin is a modestly effective drug with an excellent safety profile, surely we should replace placebo with sitagliptin (or any other approved treatment)? Sitagliptin is one drug in a large model-based meta-analysis (MBMA) I have conducted [3] including over 300 trials, of which over 40 trials included over 12000 sitagliptin treated patients; we know how effective sitagliptin is, and should use this information instead of continuing to randomise patients to placebo (see the diabetes MBMA here (tip: if you follow any link, use the “back” button in your browser to return to where you were)). In psoriasis, again it is common to randomise patients to placebo, despite over 15 drugs being approved. Psoriasis can be an awful, debilitating disease, and patients with psoriasis who enter clinical trials are in real need of effective treatment; many are in daily pain. We should not consider it acceptable to expect patients to endure 4 months of ineffective placebo treatment when a whole host of effective treatments are available. Whilst we may find it “convenient” and “easy” to continue with placebo arms, I would simply ask you this: “Would you be happy to see your son or daughter receive placebo in such a psoriasis trial, when a plethora of effective treatments exist?”

The final ugly reality of modern drug development is perhaps less heinous than those above, but something that continues to infuriate me! When we run any clinical trial in patients, the trial must be ethical; it must be able to generate meaningful and useful data to answer the questions that it seeks to address. Poor experiments in humans should not be tolerated. So let’s talk about phase 2 “dose-finding” trials. If I had a dollar for every time I read the objectives of a trial as:

“To assess the dose-response relationship of [DRUG] on [ENDPOINT] in subjects with [INDICATION]”

only to then see a terribly designed and analysed D-R trial, I would be many hundreds, perhaps thousands, of dollars richer.

Since this is so important, the whole next chapter is devoted to this topic!